AWS Backup for EC2 instances defined by IaC (Terraform)

How do we recover an instance, but still maintain the ability to manage the resource with Terraform?

Context

We use AWS Backup to back up EC2 instances in our environments. We use Terraform and deploy to three separate AWS accounts: dev, test, and prod.

We use the same Terraform code in each account, so any differences between accounts have to come either from Terraform variable maps keyed on the environment or from SSM parameters (our preferred method).
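As a rough illustration of those two approaches, here is a minimal sketch. The variable names, the parameter name `/example/instance_type`, and the instance types are hypothetical and only there to show the pattern.

```hcl
# Hypothetical names throughout; only the pattern comes from the text.
variable "environment" {
  type        = string
  description = "One of dev, test, or prod."
}

# Approach 1: a variable map keyed on the environment.
variable "instance_type_by_env" {
  type = map(string)
  default = {
    dev  = "t3.large"
    test = "t3.large"
    prod = "c5.xlarge"
  }
}

# Approach 2: the same parameter name exists in every account,
# but each account stores its own value.
data "aws_ssm_parameter" "instance_type" {
  name = "/example/instance_type"
}

locals {
  instance_type_from_map = var.instance_type_by_env[var.environment]
  # The data source marks the value as sensitive, hence nonsensitive().
  instance_type_from_ssm = nonsensitive(data.aws_ssm_parameter.instance_type.value)
}
```

With the SSM approach the code is identical in every account, and the per-account difference lives entirely in the parameter store.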

Problem

AWS Backup takes routine snapshots of ALL the volumes attached to an instance and generates a single AMI from them. If we restored this AMI to an instance, we'd get all of the volumes attached, but they wouldn't be managed by Terraform, because Terraform keeps state and associated management data for each volume individually.

So, how do we recover an instance, but still maintain the ability to manage the resource with Terraform?

Restore directly from AWS Backup, reintegrate with terraform state rm and terraform import

This means using AWS Backup the way it was intended, directly restoring an instance or volume, and then adjusting Terraform state to adopt the newly created resources. It seems like the most intuitive path, but it's far from ideal: it requires delicate statefile surgery and is prone to mistakes, particularly when an instance comes with lots of related resources, like ENIs and EBS volumes, that might still exist somewhere.
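To give a sense of what that surgery involves, here is a hedged sketch using `import` blocks, which assumes Terraform 1.5 or later (the `terraform import` command does the same job one resource at a time). The resource addresses match the example configuration later in this post, and every ID is a placeholder.

```hcl
# Before applying, the stale entries for the destroyed resources have to
# be dropped from state, for example:
#   terraform state rm aws_instance.example02
#   terraform state rm aws_ebs_volume.example02
#   terraform state rm aws_volume_attachment.example02

# Adopt the instance that AWS Backup restored (placeholder ID).
import {
  to = aws_instance.example02
  id = "i-0123456789abcdef0"
}

# Adopt the data volume that came back with it (placeholder ID).
import {
  to = aws_ebs_volume.example02
  id = "vol-0123456789abcdef0"
}

# Volume attachments are imported as DEVICE_NAME:VOLUME_ID:INSTANCE_ID.
import {
  to = aws_volume_attachment.example02
  id = "/dev/sdh:vol-0123456789abcdef0:i-0123456789abcdef0"
}
```

Every restored resource needs its own entry with the right ID, and anything missed either lingers unmanaged or shows up as drift on the next plan, which is where the mistakes tend to creep in.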

Use AWS Backup AMIs directly in Terraform

The second option is to skip AWS Backup's restore feature entirely, hand the Backup AMI to Terraform, and run the pipeline, leaving Terraform in charge of rebuilding and reattaching the destroyed resources. The problem is that we still can't use the Backup AMIs as they are. AWS Backup builds each AMI from every volume attached at backup time: it snapshots each volume and stores the corresponding block device mappings in the AMI. Launching an instance from that AMI therefore creates those volumes outside of Terraform state management. Worse, if the instance had externally defined volumes that were not deleted when the instance was terminated, Terraform will try to reattach those existing volumes on top of the volumes that the Backup AMI recreates and attaches on its own, which usually causes a deployment error as the volumes clobber each other.

This gets us closer, since we no longer have to worry about instance profiles and ENIs being created out of band, but a successful restore still requires some complicated statefile surgery and imports.
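The conflict is easier to see in configuration form. This is an illustration of the failure mode only, not something to deploy; the AMI ID is a placeholder and the other values are borrowed from the example later in this post.

```hcl
resource "aws_instance" "example02" {
  # Placeholder for an AMI produced by AWS Backup. Its block device
  # mappings cover the root volume AND the data volume at /dev/sdh, so
  # launching from it creates both volumes, and Terraform only knows
  # about the instance.
  ami           = "ami-0123456789abcdef0"
  instance_type = "c5.xlarge"
}

resource "aws_ebs_volume" "example02" {
  availability_zone = "us-west-2a"
  type              = "gp3"
  size              = 60
}

resource "aws_volume_attachment" "example02" {
  # /dev/sdh is already occupied by the volume the AMI brought along,
  # so this attachment is the step that usually fails.
  device_name = "/dev/sdh"
  volume_id   = aws_ebs_volume.example02.id
  instance_id = aws_instance.example02.id
}
```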

The least bad solution: Manage AMIs and snapshots in SSM parameters

The solution is to use one SSM parameter to store a mapping of instances to AMIs and another to store a mapping of EBS volumes to snapshot IDs. Terraform reads these parameters and builds instances and volumes from those AMIs and snapshot IDs, which preserves the architecture and ensures no infrastructure gets built outside of Terraform state. AWS Backup AMIs need to be converted into AMIs that are compatible with this system by creating a new AMI from only the root volume snapshot.

Every aws_instance resource block needs its ami uniquely defined in an SSM parameter, and every aws_ebs_volume resource block needs its snapshot_id defined the same way. An aws_instance resource block must not contain an inline ebs_block_device block: it already conflicts with Terraform state whenever externally defined aws_ebs_volume resources are attached to the instance, and it breaks the proposed mechanism for restoring instances and volumes.

Terraform code reference

Abbreviated example:

    # EC2 Instance
    resource "aws_instance" "example02" {
      ami           = nonsensitive(lookup(local.example_ami_assignments, "example02"))
      instance_type = "c5.xlarge"

      tags = {
        Name = "example02"
      }
    }

    # EBS Volume
    resource "aws_ebs_volume" "example02" {
      availability_zone = "us-west-2a"
      type              = "gp3"
      size              = 60
      snapshot_id       = nonsensitive(lookup(local.example_snapshot_assignments, "example02", null))

      tags = {
        Name = "example02_data_disk"
      }
    }

    # EBS Attachment
    resource "aws_volume_attachment" "example02" {
      device_name = "/dev/sdh"
      volume_id   = aws_ebs_volume.example02.id
      instance_id = aws_instance.example02.id
    }

    data "aws_ssm_parameter" "example_snapshot_assignments" {
      name = "example_snapshot_assignments"
    }

    data "aws_ssm_parameter" "example_ami_assignments" {
      name = "example_ami_assignments"
    }

    locals {
      example_snapshot_assignments = jsondecode(data.aws_ssm_parameter.example_snapshot_assignments.value)
      example_ami_assignments      = jsondecode(data.aws_ssm_parameter.example_ami_assignments.value)
    }

At initial deployment, the contents of each SSM parameter look something like this:

example_ami_assignments:

    {
      "example02": "ami-123123123123"
    }

example_snapshot_assignments:

    {}

To recover from a backup, update them with the following process:

- Find the most recent AMI generated for the target instance, either in the backup vault or the Protected resources page

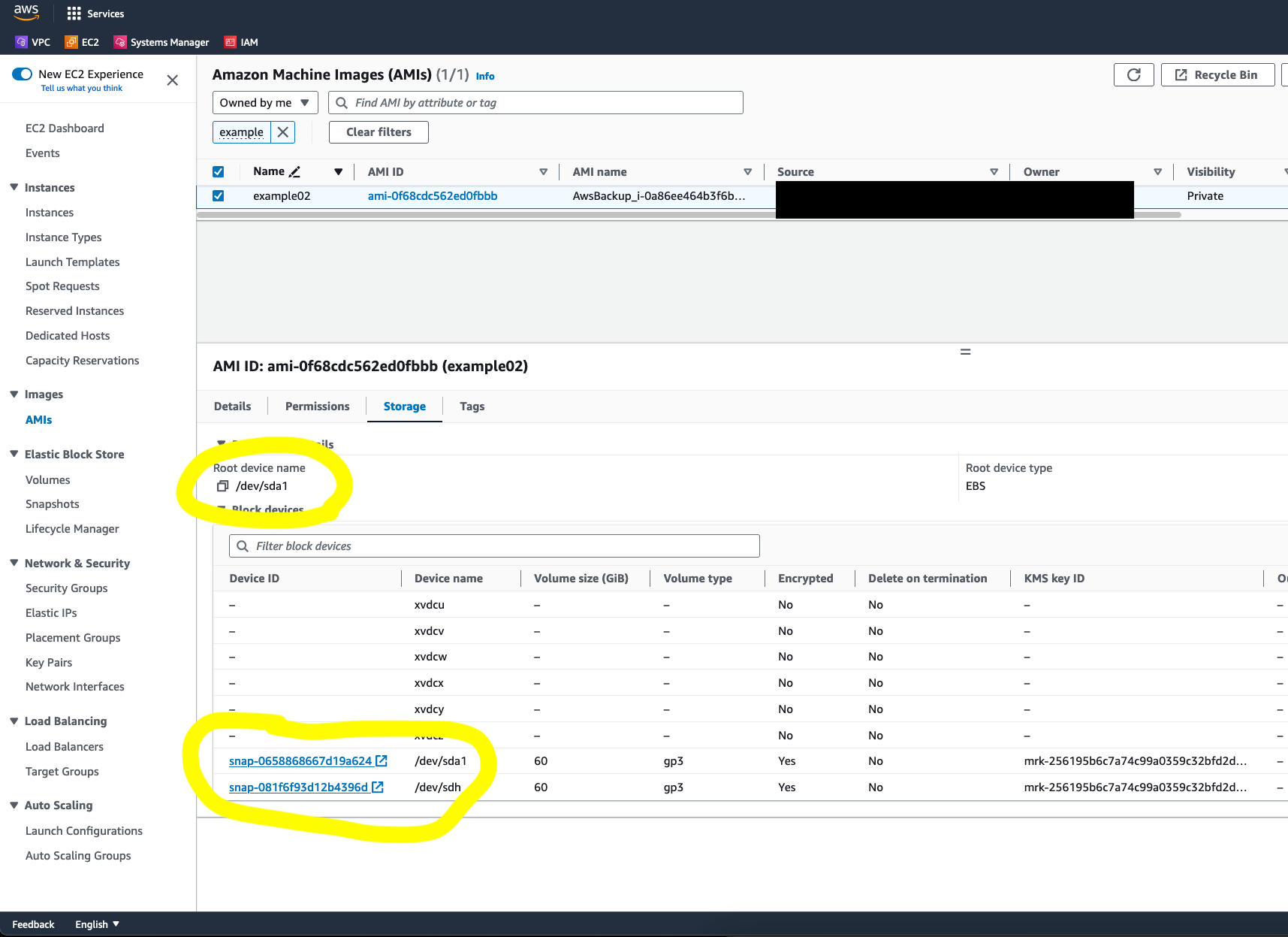

- Copy the AMI ID of this AMI, and go into the EC2 Console and look up the details of this AMI under Images -> AMIs.

- Under the storage tab of the Image Summary page, locate the Root device name

- Using the root device name, click on the snapshot corresponding to the root device

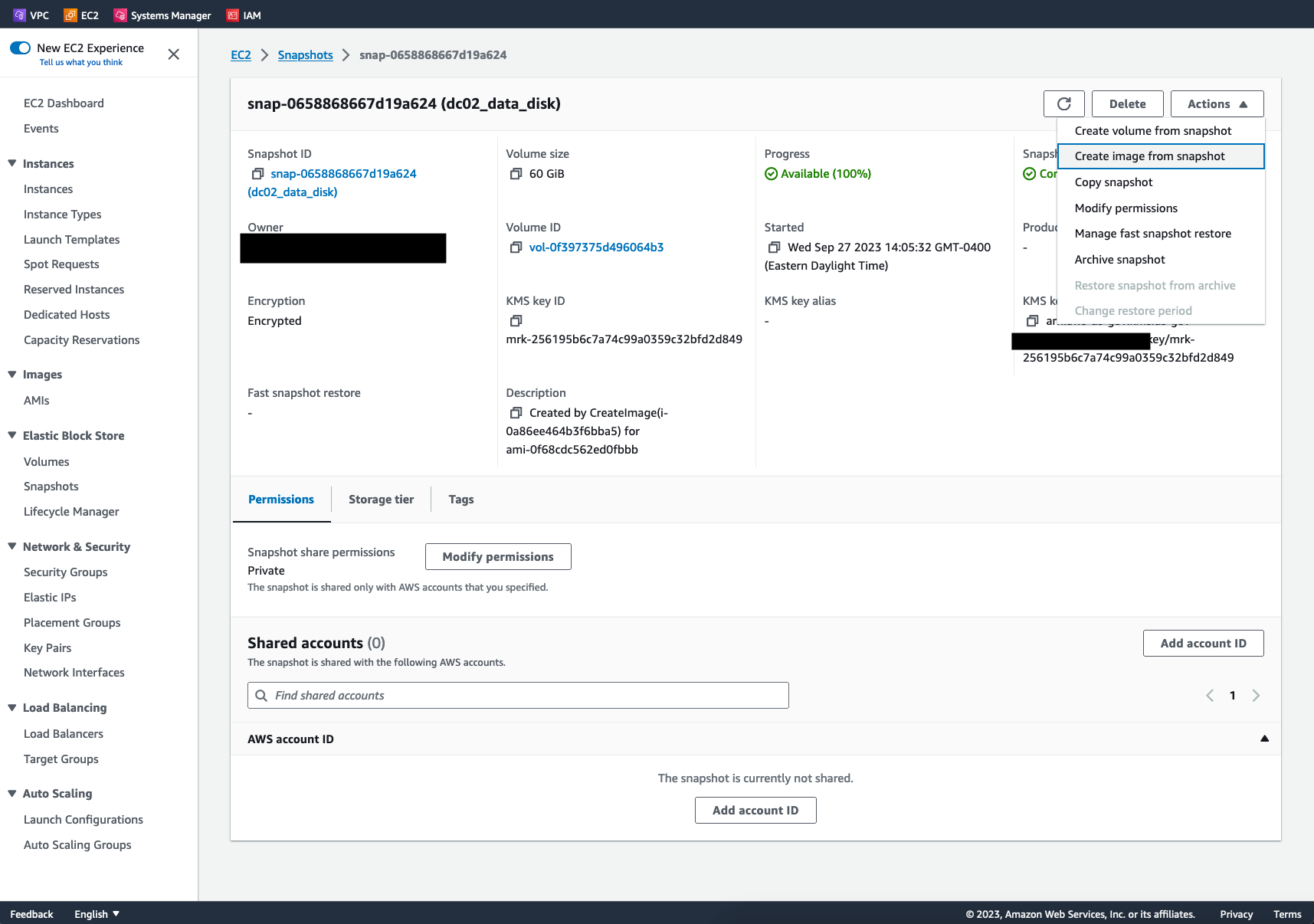

- In the Snapshot summary page, click Actions -> Create Image from Snapshot

- Enter in a name and description which identifies this new AMI and its creation date and purpose

- After Creating the image, copy the new image's AMI ID - This is the AMI to use in the AMI SSM parameter

- Locate the parameter that governs the ami id of the instance in question and paste the new image ami id into the parameter

- Under the storage tab of the Image Summary page, locate the second volume in the backup,

/dev/sdh, note its snapshot ID, this is what we need to populate in our snapshot SSM parameter - Run a Terraform apply
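The console lookups in the list above (the root device's snapshot and the data volume's snapshot) could probably also be done with a small, read-only Terraform configuration kept separate from the main stack. This is only a sketch: the variable and output names are hypothetical, and it assumes the Backup AMI is owned by the account you run it in. It does not create the new root-only AMI; that step still happens in the console.

```hcl
# Hypothetical helper configuration; it reads the Backup AMI and creates nothing.
variable "backup_ami_id" {
  type        = string
  description = "ID of the AMI that AWS Backup generated for the instance."
}

data "aws_ami" "backup" {
  owners = ["self"] # assumption: the AMI lives in this account

  filter {
    name   = "image-id"
    values = [var.backup_ami_id]
  }
}

locals {
  # Device name => snapshot ID for every volume in the AMI except the root.
  data_volume_snapshots = {
    for mapping in data.aws_ami.backup.block_device_mappings :
    mapping.device_name => lookup(mapping.ebs, "snapshot_id", null)
    if mapping.device_name != data.aws_ami.backup.root_device_name
  }
}

# Snapshot to build the new root-only AMI from.
output "root_snapshot_id" {
  value = data.aws_ami.backup.root_snapshot_id
}

# Snapshot IDs to copy into the snapshot SSM parameter.
output "data_volume_snapshots" {
  value = local.data_volume_snapshots
}
```

Running `terraform apply -var backup_ami_id=<the Backup AMI ID>` against this configuration should print the same snapshot IDs that the Storage tab shows, which makes them easier to copy without misreading a row.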

So for me, my two new SSM parameters would look something like:

example_ami_assignments (this is the AMI that I created in step 7):

    {
      "example02": "ami-99999999"
    }

example_snapshot_assignments (the value from step 10, the snapshot ID of the second volume):

    {
      "example02": "snap-081f6f93d12b4396d"
    }

And I would see:

    Plan: 3 to add, 0 to change, 3 to destroy.

    # aws_ebs_volume.example02 must be replaced
    -/+ resource "aws_ebs_volume" "example02" {
    ...

    # aws_instance.example02 must be replaced
    -/+ resource "aws_instance" "example02" {
      ~ ami = "ami-00b8d25dfa8848c0b" -> "ami-0ff3af34fab7f457b" # forces replacement
    ...

    # aws_volume_attachment.example02 must be replaced
    -/+ resource "aws_volume_attachment" "example02" {

Conclusion

This method lets us use AWS Backup to recover an instance from backup while keeping the instance definition in Terraform. It's not a great method, but it's the least bad option we've found.